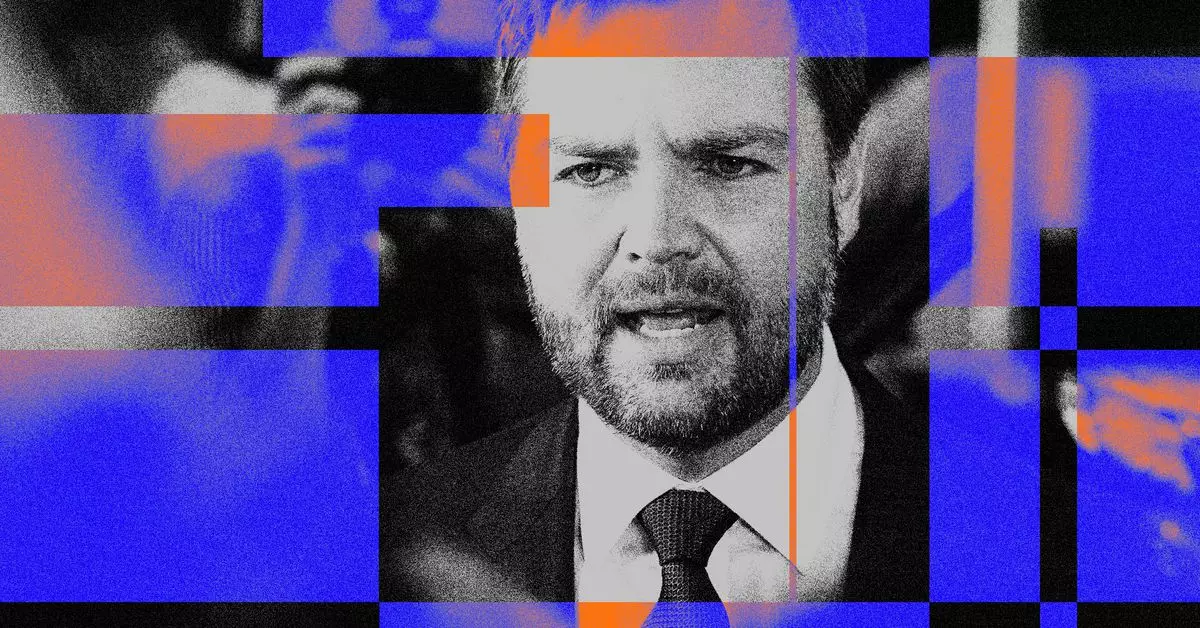

In an age where information spreads like wildfire, social media platforms play a pivotal role in shaping narratives and public opinion. Recently, Meta, the parent company of Facebook, Instagram, and Threads, took the controversial step of restricting links to Ken Klippenstein’s newsletter. The newsletter reportedly contains sensitive information: a dossier on JD Vance allegedly obtained in a hack of the Trump campaign attributed to the Iranian government. Meta’s intervention raises significant questions about censorship and the ethical boundaries of information dissemination.

Meta’s Position and Policy Enforcement

Meta spokesperson Dave Arnold stated that the company’s decision aligns with its policies that prohibit content from hacked sources and material linked to foreign influence operations during electoral processes. This stance is ostensibly grounded in a commitment to uphold the integrity of information circulated on its platforms. The measures include removing or disabling posts linked to the controversial dossier, reflecting a stringent adherence to community standards intended to mitigate the impact of compromised or potentially harmful data.

The restrictions go beyond blocking direct links to the dossier. Users on Threads have reported that posts sharing the document were deleted or had their links disabled, stifling a growing conversation about the revelations in Klippenstein’s work. Such actions raise concerns about censorship by a media conglomerate that significantly influences public dialogue.

In the wake of Meta’s restrictions, users have looked for ways around the newly imposed limits. Attempts to share the dossier often rely on creative workarounds, such as altered URLs, unconventional formatting, or even QR codes, to bypass the blocks. This resourcefulness highlights the persistent human urge to seek and share information, even in the face of corporate restrictions.

The reaction on other platforms such as X (formerly Twitter) follows the same pattern. Users there have reported comparable obstacles when trying to share the document, hinting at a broader trend of restrictive measures across social media. This consistency suggests a shared apprehension among large tech companies about misinformation, hacking, and foreign influence, regardless of the specific content in question.

The actions undertaken by Meta and other platforms reflect a growing trend of censorship that could potentially stifle free expression and access to information. As each platform defines its policies against the backdrop of societal expectations and legal regulations, the balance between protecting users and preserving open discourse becomes increasingly complex.

Questions arise about who gets to decide what constitutes harmful or misleading information and how these decisions might sway public discourse. Is it the responsibility of social media companies to filter content, or should users be entrusted to discern credible information independently? This ongoing debate spotlights the tension between corporate accountability and user autonomy in an era where information is both a powerful tool and a potential weapon.

As Meta continues to enforce these stringent measures, the implications for public discourse and access to information remain profound. Users are left to navigate a digital landscape where information control is increasingly prevalent. Such controls might protect against disinformation, but they also risk eroding the open dialogue that underpins a democratic society. Nuanced discussion about ethics, responsibility, and the role of technology in modern communication is more urgent than ever, and society must ask where the line should be drawn between transparency and protection.